Security experts are on the alert for the next evolution of social engineering in business settings: deepfake employment interviews. The latest trend offers a glimpse into the future arsenal of criminals who use convincing, faked personae against business users to steal data and commit fraud.

The concern follows a new advisory this week from the FBI Internet Crime Complaint Center (IC3), which warned of increased activity from fraudsters trying to game the online interview process for remote-work positions. The advisory said that criminals are using a combination of deepfake videos and stolen personal data to misrepresent themselves and gain employment in a range of work-from-home positions that include information technology, computer programming, database maintenance, and software-related job functions.

Federal law-enforcement officials said in the advisory that they’ve received a rash of complaints from businesses.

“In these interviews, the actions and lip movement of the person seen interviewed on-camera do not completely coordinate with the audio of the person speaking,” the advisory said. “At times, actions such as coughing, sneezing, or other auditory actions are not aligned with what is presented visually.”

The complaints also noted that criminals were using stolen personally identifiable information (PII) in conjunction with these fake videos to better impersonate applicants, with later background checks digging up discrepancies between the individual who interviewed and the identity presented in the application.

Potential Motives of Deepfake Attacks

While the advisory didn’t specify the motives for these attacks, it did note that the positions these fraudsters applied for were ones with some level of corporate access to sensitive data or systems.

Thus, security experts believe one of the most obvious goals in deepfaking one’s way through a remote interview is to get a criminal into a position to infiltrate an organization for anything from corporate espionage to common theft.

“Notably, some reported positions include access to customer PII, financial data, corporate IT databases and/or proprietary information,” the advisory said.

“A fraudster that hooks a remote job takes several giant steps toward stealing the organization’s data crown jewels or locking them up for ransomware,” says Gil Dabah, co-founder and CEO of Piiano. “Now they’re an insider threat and much harder to detect.”

Additionally, short-term impersonation might also be a way for applicants with a “tainted personal profile” to get past security checks, says DJ Sampath, co-founder and CEO of Armorblox.

“These deepfake profiles are set up to bypass the checks and balances to get through the company’s recruitment policy,” he says.

There’s potential that in addition to getting access for stealing information, foreign actors could be attempting to deepfake their way into US firms to fund other hacking enterprises.

“This FBI security warning is one of many that have been reported by federal agencies in the past several months. Recently, the US Treasury, State Department, and FBI released an official warning indicating that companies must be cautious of North Korean IT workers pretending to be freelance contractors to infiltrate companies and collect revenue for their country,” explains Stuart Wells, CTO of Jumio. “Organizations that unknowingly pay North Korean hackers potentially face legal consequences and violate government sanctions.”

What This Means for CISOs

Many of the deepfake warnings of the past few years have been primarily around political or social issues. However, this latest evolution in the use of synthetic media by criminals points to the increasing relevance of deepfake detection in business settings.

“I think this is a valid concern,” says Dr. Amit Roy-Chowdhury, professor of electrical and computer engineering at University of California at Riverside. “Doing a deepfake video for the duration of a meeting is challenging and relatively easy to detect. However, small companies may not have the technology to be able to do this detection and hence may be fooled by the deepfake videos. Deepfakes, especially images, can be very convincing and if paired with personal data can be used to create workplace fraud.”

Sampath warns that one of the most disconcerting parts of this attack is the use of stolen PII to help with the impersonation.

“As the prevalence of the DarkNet with compromised credentials continues to grow, we should expect these malicious threats to continue in scale,” he says. “CISOs have to go the extra mile to upgrade their security posture when it comes to background checks in recruiting. Quite often these processes are outsourced, and a tighter procedure is warranted to mitigate these risks.”

Future Deepfake Concerns

Prior to this, the most public examples of criminal use of deepfakes in corporate settings have been as a tool to support business email compromise (BEC) attacks. For example, in 2019 an attacker used deepfake software to impersonate the voice of a German company’s CEO to convince another executive at the company to urgently send a wire transfer of $243,000 in support of a made-up business emergency. More dramatically, last fall a criminal used deepfake audio and forged email to convince an employee of a United Arab Emirates company to transfer $35 million to an account owned by the bad guys, tricking the victim into thinking it was in support of a company acquisition.

According to Matthew Canham, CEO of Beyond Layer 7 and a faculty member at George Mason University, attackers are increasingly going to use deepfake technology as a creative tool in their arsenals to help make their social engineering attempts more effective.

“Synthetic media like deepfakes is going to just take social engineering to another level,” says Canham, who last year at Black Hat presented research on countermeasures to combat deepfake technology.

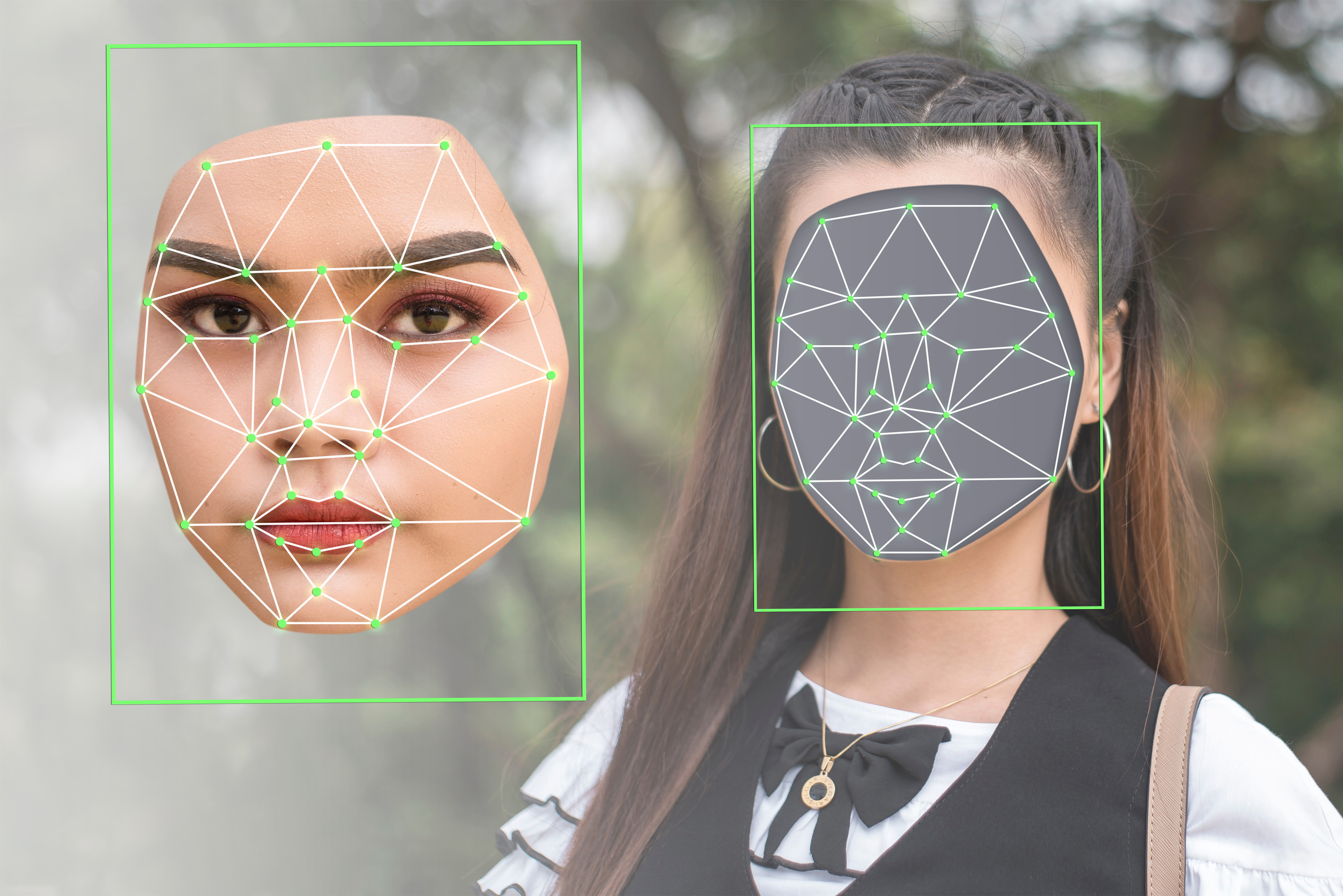

The good news is that researchers like Canham and Roy-Chowdhury are making headway on coming up with detection and countermeasures for deepfakes. In May, Roy-Chowdhury’s team developed a framework for detecting manipulated facial expressions in deepfaked videos with unprecedented levels of accuracy.

He believes that new methods of detection like this can be put into use relatively quickly by the cybersecurity community.

“I think they can be operationalized in the short term, one or two years, with collaboration with professional software development that can take the research to the software product phase,” he says.