A while ago I wrote a post about tricks to improve the performance of a CIFAR-10 classifier, based on things I learned from New York University's Deep Learning with PyTorch course taught by Yann LeCun and Alfredo Canziani. The tricks I covered were conveniently located on a single slide in one of the lectures. Shortly thereafter, I learned of a few more tricks that were mentioned in passing, so I figured it might be interesting to try these out as well and see how well they worked. That is the subject of this blog post.

As before, the tricks themselves are not radically new or anything. My interest in implementing them is as much about learning how to do it in PyTorch as it is driven by curiosity about their effectiveness on the classification task. The task is relatively simple: the CIFAR-10 dataset contains 60,000 (50,000 training and 10,000 test) low resolution 32×32 RGB images, and the task is to classify each of them as one of 10 distinct classes. The network we use is adapted from the CNN described in the TensorFlow CNN tutorial.

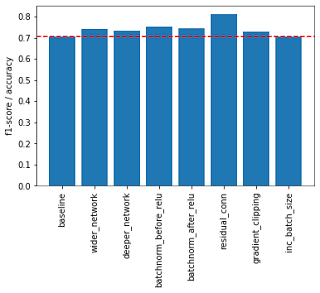

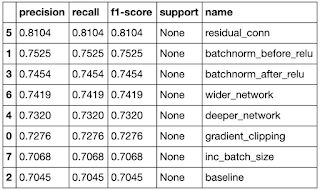

We start with a baseline network that is identical to the one described in the TensorFlow CNN tutorial. We train the network on the training set and evaluate the trained network using classification accuracy (micro-F1 score) on the test set. All models were trained for 10 epochs using the Adam optimizer. Here are the different scenarios I tried.

- Baseline: This is a CNN with three layers of convolutions and max-pooling, followed by a two layer classification head. It uses the Cross Entropy loss function and the Adam optimizer with a fixed learning rate of 1e-3. The input has 3 channels (RGB images), and the convolution layers create 32, 64, and 64 channels respectively. The resulting tensor is then flattened and passed through two linear layers to predict softmax probabilities for each of the 10 classes. The number of trainable parameters in this network is 122,570 and it achieves an accuracy score of 0.705.

- Wider Network: The size of the penultimate layer in the feedforward (dense) part of the network was widened from 64 to 512. This increased the number of trainable parameters to 586,250 and gave a score of 0.742.

- Deeper Network: Similar to the previous approach, the dense part of the network was changed from a single layer of size 64 to two layers of size (512, 256). As with the previous approach, this increased the number of trainable parameters, here to 715,018, and gave a score of 0.732.

- Batch Normalization (before ReLU): This trick adds a Batch Normalization layer after each convolution layer. There is some confusion on whether to put the BatchNorm before the ReLU activation or after it, so I tried both ways. In this configuration, the BatchNorm layer is placed before the ReLU activation, i.e., each convolution block looks like (Conv2d → BatchNorm2d → ReLU → MaxPool2d). The BatchNorm layer functions as a regularizer. It increases the number of trainable parameters slightly to 122,890 and gives a score of 0.752. Between the two setups (this and the one below), this seems to be the better one to use based on my results.

- Batch Normalization (after ReLU): This setup is identical to the previous one, except that the BatchNorm layer is placed after the ReLU, i.e., each convolution block now looks like (Conv2d → ReLU → BatchNorm2d → MaxPool2d). This configuration gives a score of 0.745, which is lower than the score from the previous setup.

- Residual Connection: This approach involves replacing each convolution block (Conv2d → ReLU → MaxPool2d) with a basic ResNet block composed of two convolution layers with a shortcut residual connection, followed by ReLU and MaxPool. This increases the number of trainable parameters to 212,714, a much more modest increase than the Wider and Deeper Network approaches, but with a much larger score boost: 0.810, the highest among all the approaches tried.

- Gradient Clipping: Gradient Clipping is more commonly used with recurrent networks, but serves a similar function to BatchNorm in that it keeps the gradients from exploding. It is applied as an adjustment during the training loop and does not create new trainable parameters. It gave a much more modest gain, with a score of 0.728.

- Increase Batch Size: Increasing the batch size from 64 to 128 did not result in a significant change in score. It went up from 0.705 to 0.707.
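To make the architectural variants above concrete, here is a rough PyTorch sketch of how they could be assembled. It is not the notebook's code. The names `conv_block`, `ResBlock`, `make_net` and `count_params` are hypothetical, and several details are assumptions the post does not state: 3×3 kernels with padding of 1, a BatchNorm after each convolution inside the residual block, and a 1×1 convolution on the shortcut path. These choices were picked because they happen to reproduce the parameter counts quoted in the list.

```python
import torch
import torch.nn as nn


def conv_block(c_in, c_out, bn=None):
    """Conv2d -> ReLU -> MaxPool2d, with optional BatchNorm before or after the ReLU."""
    layers = [nn.Conv2d(c_in, c_out, kernel_size=3, padding=1)]  # assumed 3x3, padding 1
    if bn == "before":
        layers += [nn.BatchNorm2d(c_out), nn.ReLU()]
    elif bn == "after":
        layers += [nn.ReLU(), nn.BatchNorm2d(c_out)]
    else:
        layers += [nn.ReLU()]
    layers.append(nn.MaxPool2d(2))
    return nn.Sequential(*layers)


class ResBlock(nn.Module):
    """Two 3x3 convolutions plus a shortcut, followed by ReLU and MaxPool."""

    def __init__(self, c_in, c_out):
        super().__init__()
        # Assumption: BatchNorm after each convolution inside the block.
        self.body = nn.Sequential(
            nn.Conv2d(c_in, c_out, kernel_size=3, padding=1),
            nn.BatchNorm2d(c_out),
            nn.ReLU(),
            nn.Conv2d(c_out, c_out, kernel_size=3, padding=1),
            nn.BatchNorm2d(c_out),
        )
        # Assumption: 1x1 convolution so the shortcut matches the output channels.
        self.shortcut = nn.Conv2d(c_in, c_out, kernel_size=1)
        self.pool = nn.MaxPool2d(2)

    def forward(self, x):
        return self.pool(torch.relu(self.body(x) + self.shortcut(x)))


def make_net(hidden=(64,), bn=None, residual=False, num_classes=10):
    """Build one variant: three conv blocks (3 -> 32 -> 64 -> 64) plus a dense head."""
    channels = [3, 32, 64, 64]
    blocks = [
        ResBlock(c_in, c_out) if residual else conv_block(c_in, c_out, bn)
        for c_in, c_out in zip(channels[:-1], channels[1:])
    ]
    # Three 2x2 poolings shrink 32x32 inputs to 4x4, so the flattened size is 64*4*4.
    sizes = [64 * 4 * 4, *hidden]
    head = []
    for n_in, n_out in zip(sizes[:-1], sizes[1:]):
        head += [nn.Linear(n_in, n_out), nn.ReLU()]
    # The last layer returns logits; nn.CrossEntropyLoss applies the softmax itself.
    head.append(nn.Linear(sizes[-1], num_classes))
    return nn.Sequential(*blocks, nn.Flatten(), *head)


def count_params(model):
    """Number of trainable parameters."""
    return sum(p.numel() for p in model.parameters() if p.requires_grad)


variants = {
    "baseline": make_net(),
    "wider": make_net(hidden=(512,)),
    "deeper": make_net(hidden=(512, 256)),
    "bn_before_relu": make_net(bn="before"),
    "bn_after_relu": make_net(bn="after"),
    "residual": make_net(residual=True),
}
for name, model in variants.items():
    print(f"{name:15s} {count_params(model):>8,d}")
```

Under these assumptions the counts come out to 122,570 (baseline), 586,250 (wider), 715,018 (deeper), 122,890 (either BatchNorm placement) and 212,714 (residual), which agrees with the numbers reported above. That agreement suggests the sketch is close in shape to the notebook's models, but it does not prove the layers are arranged identically.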

The code for these experiments is available in the notebook at the link below. It was run on Colab (Google Colaboratory) on a (free) GPU instance. You can rerun the code yourself on Colab using the Open in Colab button at the top of the notebook.
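For readers who only want the gist of the training loop, one optimization step with optional gradient clipping could look roughly like the sketch below. This is an illustration rather than the notebook's code: `train_step` is a hypothetical helper, the stand-in linear model and random batch exist only to make the snippet runnable, and both the choice of clipping by norm (as opposed to by value) and the threshold of 1.0 are assumptions, since the post does not say which was used.

```python
import torch
import torch.nn as nn


def train_step(model, batch, optimizer, loss_fn, max_grad_norm=None):
    """Run one optimization step and return the batch loss as a float."""
    inputs, labels = batch
    model.train()
    optimizer.zero_grad()
    loss = loss_fn(model(inputs), labels)
    loss.backward()
    if max_grad_norm is not None:
        # Rescale all gradients so their combined L2 norm is at most max_grad_norm.
        # This happens between backward() and step() and adds no parameters.
        nn.utils.clip_grad_norm_(model.parameters(), max_norm=max_grad_norm)
    optimizer.step()
    return loss.item()


# Stand-in model and random data, only to show the call pattern.
torch.manual_seed(0)
model = nn.Sequential(nn.Flatten(), nn.Linear(3 * 32 * 32, 10))
optimizer = torch.optim.Adam(model.parameters(), lr=1e-3)  # fixed learning rate, as in the post
loss_fn = nn.CrossEntropyLoss()
batch = (torch.randn(64, 3, 32, 32), torch.randint(0, 10, (64,)))

loss = train_step(model, batch, optimizer, loss_fn, max_grad_norm=1.0)
print(f"loss after one clipped step: {loss:.4f}")
```

Passing `max_grad_norm=None` gives the unclipped step used by the other scenarios, so the same loop can serve every experiment.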

The results of the evaluation for each of the different tricks are summarized in the accompanying barchart and table. All the tricks outperformed the baseline, but the best performer was the one using residual connections, which outperformed the baseline by about 10.5 percentage points (0.810 versus 0.705). The other notable performer was BatchNorm, and putting it before the ReLU activation worked better than putting it after. Making the dense head wider and deeper also increased performance.

One other thing I looked at was parameter efficiency. Widening and deepening the dense head layers caused the largest increase in the number of trainable parameters, but did not lead to a corresponding increase in performance. On the other hand, adding BatchNorm gave a performance boost with only a small increase in the number of parameters. The residual connection approach did increase the number of parameters somewhat, but gave a much larger boost in performance.
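The parameter-efficiency comparison can be made explicit with a little arithmetic on the numbers reported in this post. The sketch below is only an illustration: the `efficiency` helper and the "percentage points per 1,000 extra parameters" measure are my own hypothetical framing, not something computed in the notebook. Scenarios that add no parameters, or whose parameter count is not stated, are left out.

```python
# (trainable parameters, test accuracy) as reported in the post
results = {
    "baseline": (122_570, 0.705),
    "wider": (586_250, 0.742),
    "deeper": (715_018, 0.732),
    "batchnorm_before_relu": (122_890, 0.752),
    "residual": (212_714, 0.810),
}
base_params, base_acc = results["baseline"]


def efficiency(name):
    """Return (extra parameters, gain in percentage points, gain per 1,000 extra parameters)."""
    params, acc = results[name]
    extra = params - base_params
    gain_pts = (acc - base_acc) * 100
    return extra, gain_pts, gain_pts / (extra / 1000)


for name in results:
    if name == "baseline":
        continue
    extra, gain_pts, per_k = efficiency(name)
    print(f"{name:22s} +{extra:>7,d} params  +{gain_pts:4.1f} pts  {per_k:8.4f} pts per 1k params")
```

By this measure BatchNorm is far ahead, since it adds only 320 parameters, the residual network comes next, and the wider and deeper heads trail well behind, which is the same ordering the paragraph above describes in words.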

And that's all I had for today. It was fun to leverage the dynamic nature of PyTorch to build relatively complex models without too many more lines of code. I hope you found it useful.

Edit 2021-03-28: I had a bug in my notebook where I was creating an additional layer in the FCN head that I did not intend to have, so I fixed that and re-ran the experiments, which gave different absolute numbers but largely retained the same rankings. The updated notebook is available on GitHub via the provided link, and the numbers have been updated in the blog post.