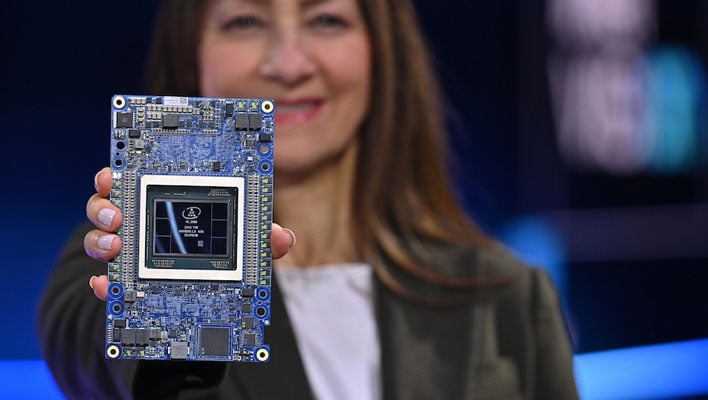

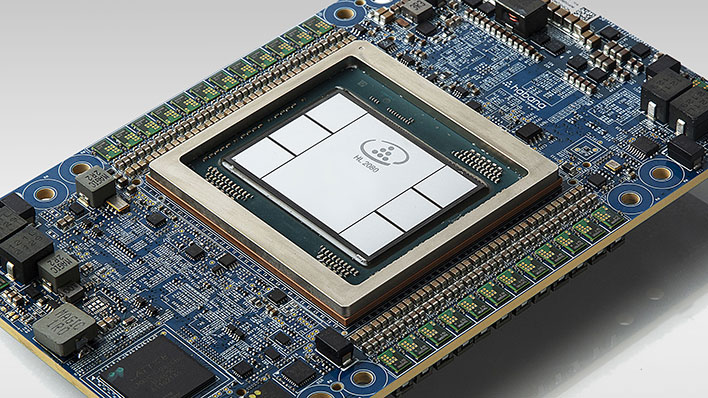

Gaudi2 is a giant slab of silicon, as shown in the picture above. The Gaudi2 processor in the middle is flanked by six high bandwidth memory (HBM) tiles on the outside. That setup triples the in-package memory capacity, from 32GB in the previous version to 96GB of HBM2E in the current iteration, serving up 2.45TB/s of bandwidth.
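The memory figures above can be checked with some quick arithmetic. The sketch below uses only the numbers quoted in the article (32GB, 96GB, six stacks, 2.45TB/s). It assumes capacity and bandwidth are split evenly across the six stacks and uses decimal units (1TB = 1000GB), so treat the per-stack results as rough estimates, not official specs.

```python
# Figures quoted in the article; the even split across stacks is an assumption.
GAUDI1_HBM_GB = 32
GAUDI2_HBM_GB = 96
GAUDI2_HBM_STACKS = 6
GAUDI2_BW_TBPS = 2.45

# Generation-over-generation capacity ratio ("tripling").
capacity_ratio = GAUDI2_HBM_GB / GAUDI1_HBM_GB

# Per-stack capacity and bandwidth, assuming identical stacks.
per_stack_gb = GAUDI2_HBM_GB / GAUDI2_HBM_STACKS
per_stack_bw_gbps = GAUDI2_BW_TBPS * 1000 / GAUDI2_HBM_STACKS

# Time to stream the full 96GB once at peak bandwidth (decimal units).
full_sweep_ms = GAUDI2_HBM_GB / (GAUDI2_BW_TBPS * 1000) * 1000

print(f"capacity ratio: {capacity_ratio:.1f}x")
print(f"per-stack capacity: {per_stack_gb:.0f} GB")
print(f"per-stack bandwidth: {per_stack_bw_gbps:.1f} GB/s")
print(f"full-memory sweep: {full_sweep_ms:.1f} ms")
```

The last figure shows that even at peak bandwidth, reading every byte of HBM once takes tens of milliseconds. That is one reason memory bandwidth matters as much as capacity for training workloads.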

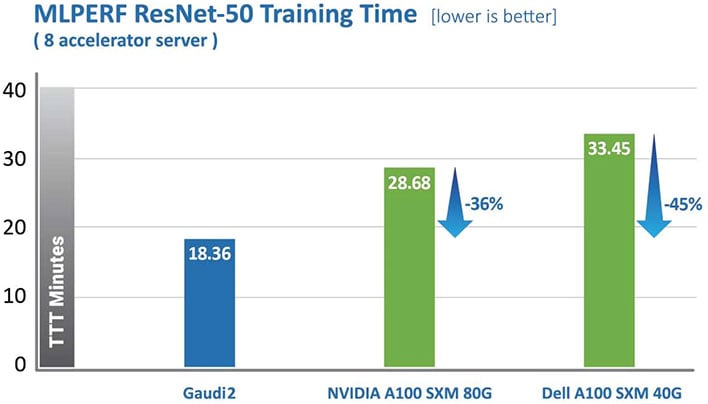

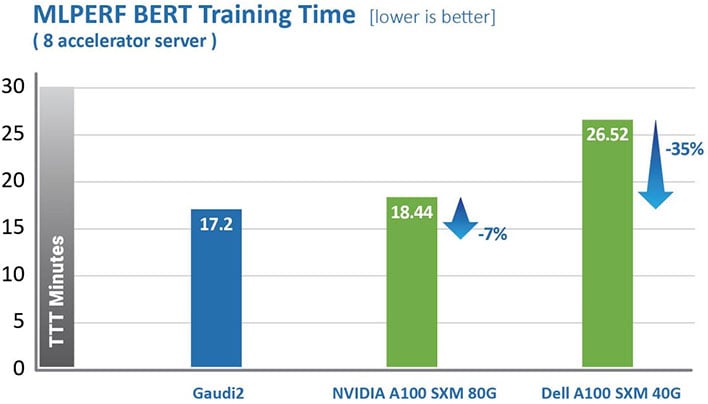

Intel’s latest benchmarks show what it sees as “dramatic advancements in time-to-train (TTT)” over the first-gen Gaudi chip. Even more importantly (for Intel), its latest MLPerf submission highlights several performance wins over NVIDIA’s A100-80G in eight-accelerator configurations on vision and language training models.

Intel and NVIDIA both have huge vested interests in dominating the data center. MLPerf is an industry-standard benchmark that NVIDIA often uses to trumpet its own performance wins, so it is a fair playing field. It is also easy to see why Intel is keen to flex what it has been able to accomplish with its Gaudi2 processor.

Intel Habana Gaudi2 Versus NVIDIA A100 In MLPerf

Two of the highlights Intel showed off were ResNet-50 and BERT training times. ResNet is a vision/image recognition model, while BERT is for natural language processing. Both are industry-standard models in key areas of AI and ML.

In an eight-accelerator server, Intel’s benchmarks show up to 45 percent better performance in ResNet and up to 35 percent better in BERT compared to NVIDIA’s A100 GPU. Compared to the first-gen Gaudi chip, the gains are up to 3X and 4.7X, respectively.

“These advances can be attributed to the transition to 7-nanometer process from 16nm, tripling the number of Tensor Processor Cores, increasing the GEMM engine compute capacity, tripling the in-package high bandwidth memory capacity, increasing bandwidth and doubling the SRAM size,” Intel says.

“For vision models, Gaudi2 has a new feature in the form of an integrated media engine, which operates independently and can handle the entire pre-processing pipe for compressed imaging, including data augmentation required for AI training,” Intel adds.

The Gaudi family compute architecture is heterogeneous and comprises two compute engines: a Matrix Multiplication Engine (MME) and a fully programmable Tensor Processor Core (TPC) cluster. The MME handles operations that can be lowered to matrix multiplications, while the TPC cluster is tailored for deep learning operations and accelerates everything else.
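To illustrate the MME/TPC split conceptually, here is a minimal, hypothetical sketch of how a graph compiler might route operations between the two engines. The op names, the `GEMM_LOWERABLE` set, and the `assign_engine`/`partition` helpers are all invented for illustration. This is not Habana's SynapseAI API or its actual scheduling logic. Convolutions are placed in the GEMM group on the assumption that they are lowered to matrix multiplications (for example via im2col).

```python
from dataclasses import dataclass

# Hypothetical set of op kinds that can be lowered to matrix multiplication.
# conv2d is included on the assumption it is lowered to a GEMM (e.g. im2col).
GEMM_LOWERABLE = {"matmul", "batch_matmul", "linear", "conv2d"}


@dataclass
class Op:
    name: str  # node name in the (hypothetical) model graph
    kind: str  # operation type, e.g. "conv2d" or "relu"


def assign_engine(op: Op) -> str:
    """Route GEMM-lowerable ops to the MME; everything else goes to the TPC cluster."""
    return "MME" if op.kind in GEMM_LOWERABLE else "TPC"


def partition(graph: list[Op]) -> dict[str, list[str]]:
    """Group op names by the engine they would be assigned to."""
    plan: dict[str, list[str]] = {"MME": [], "TPC": []}
    for op in graph:
        plan[assign_engine(op)].append(op.name)
    return plan


if __name__ == "__main__":
    graph = [
        Op("conv1", "conv2d"),
        Op("bn1", "batch_norm"),
        Op("relu1", "relu"),
        Op("fc", "linear"),
        Op("softmax", "softmax"),
    ]
    print(partition(graph))
```

In this toy graph, the convolution and fully connected layer land on the MME, while batch norm, ReLU and softmax fall through to the programmable TPC cluster. A real compiler would also weigh data movement and let the two engines overlap work, which this sketch ignores.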